Biography

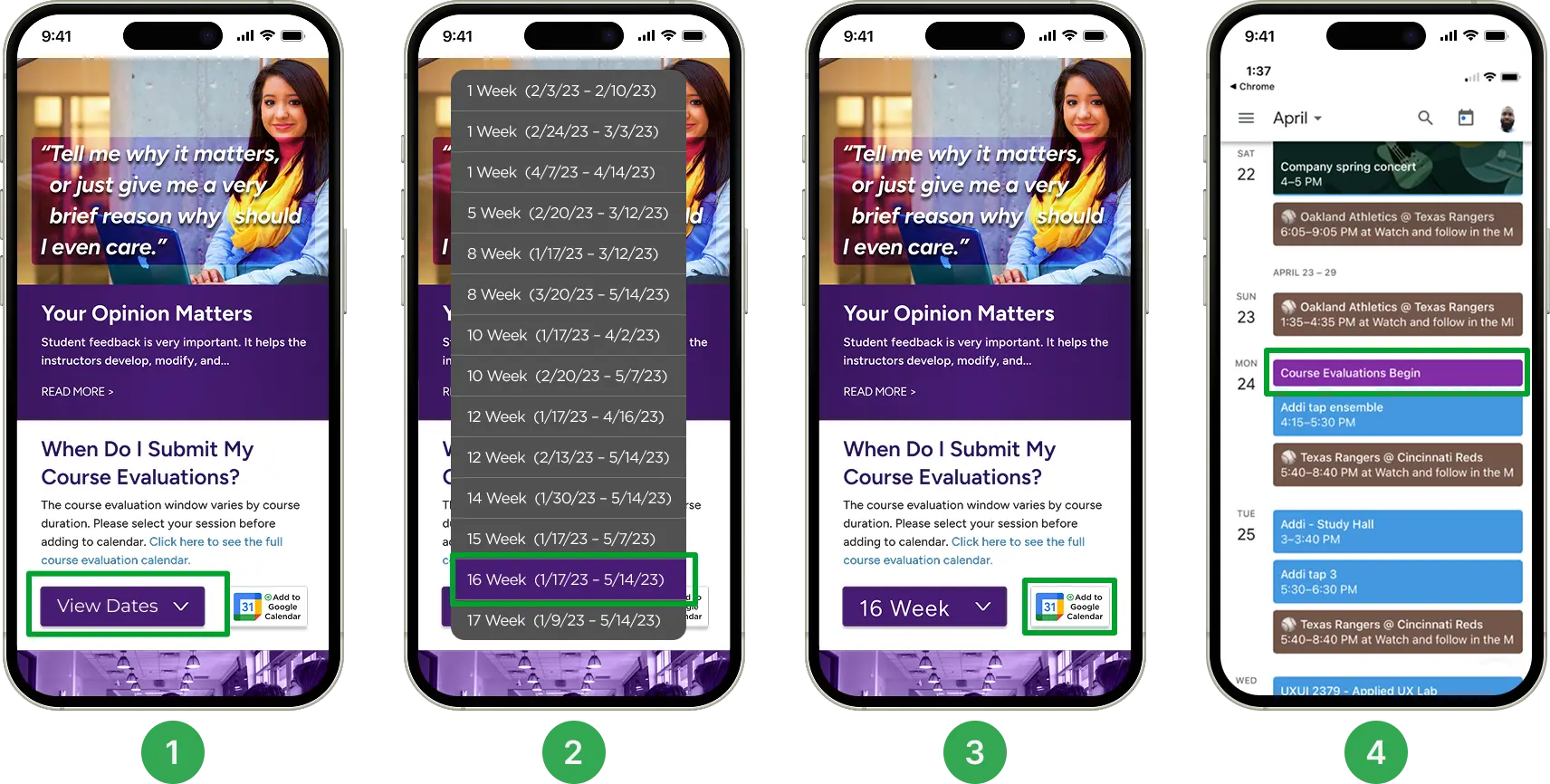

Michelle is a 24-year-old student. She's a double major who is very busy with her classes, family life, and social obligations. She values being able to put important dates on her calendar.

Goals

- Possessing a clear plan & structure for tasks

- Desires to give back to ACC and help those coming after her

- Views time as her most valuable resource

Frustrations

- Occasionally forgets to add things to her calendar or to-do list

- Has a tendency to multitask, which leads to task triage

- At times needs extra input to understand how long a task will take

Biography

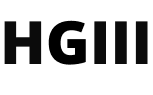

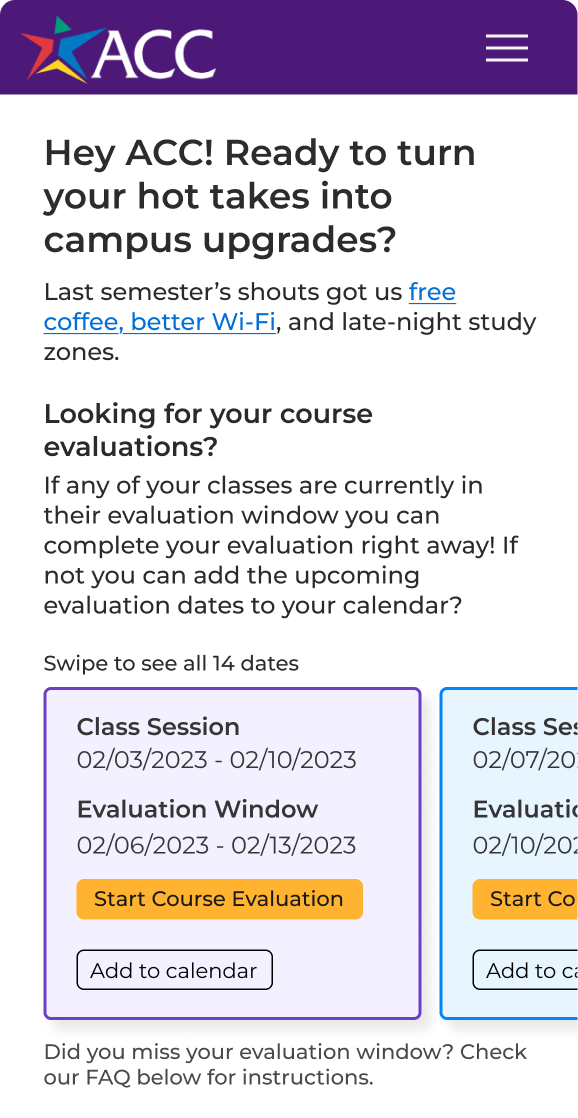

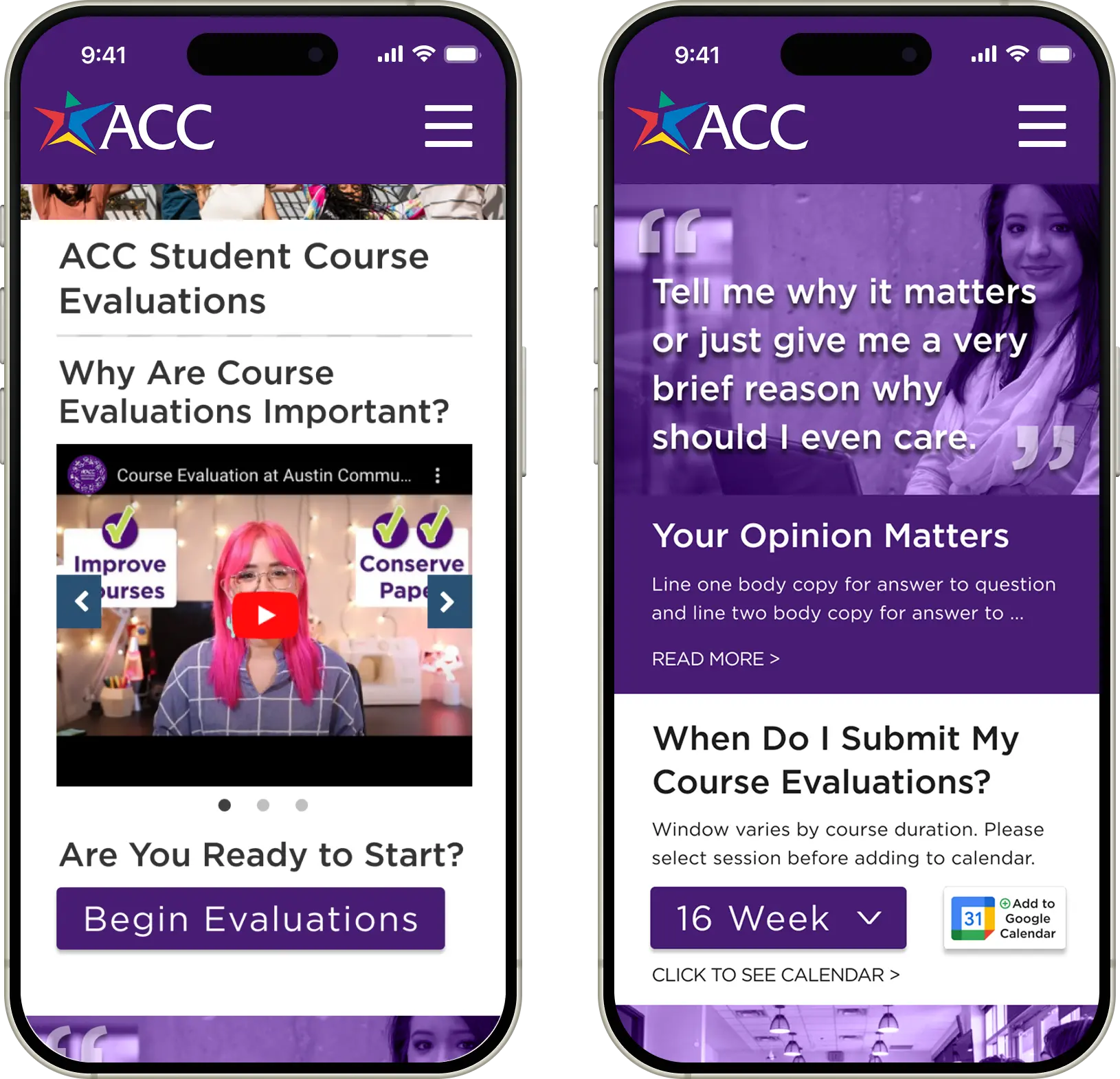

Taylor is a 21-year-old first-generation student who spends most of their time on the go and values mobile-first interactions.

Goals

- Experiences need to have as little friction as possible

- Wants to stay aware of the latest events within their community

- Representation is a paramount concern

Frustrations

- May miss out due to a constantly on-the-go schedule

- Impatient with tasks and likes to move quickly

- Critical of experiences & expects them to be well-crafted

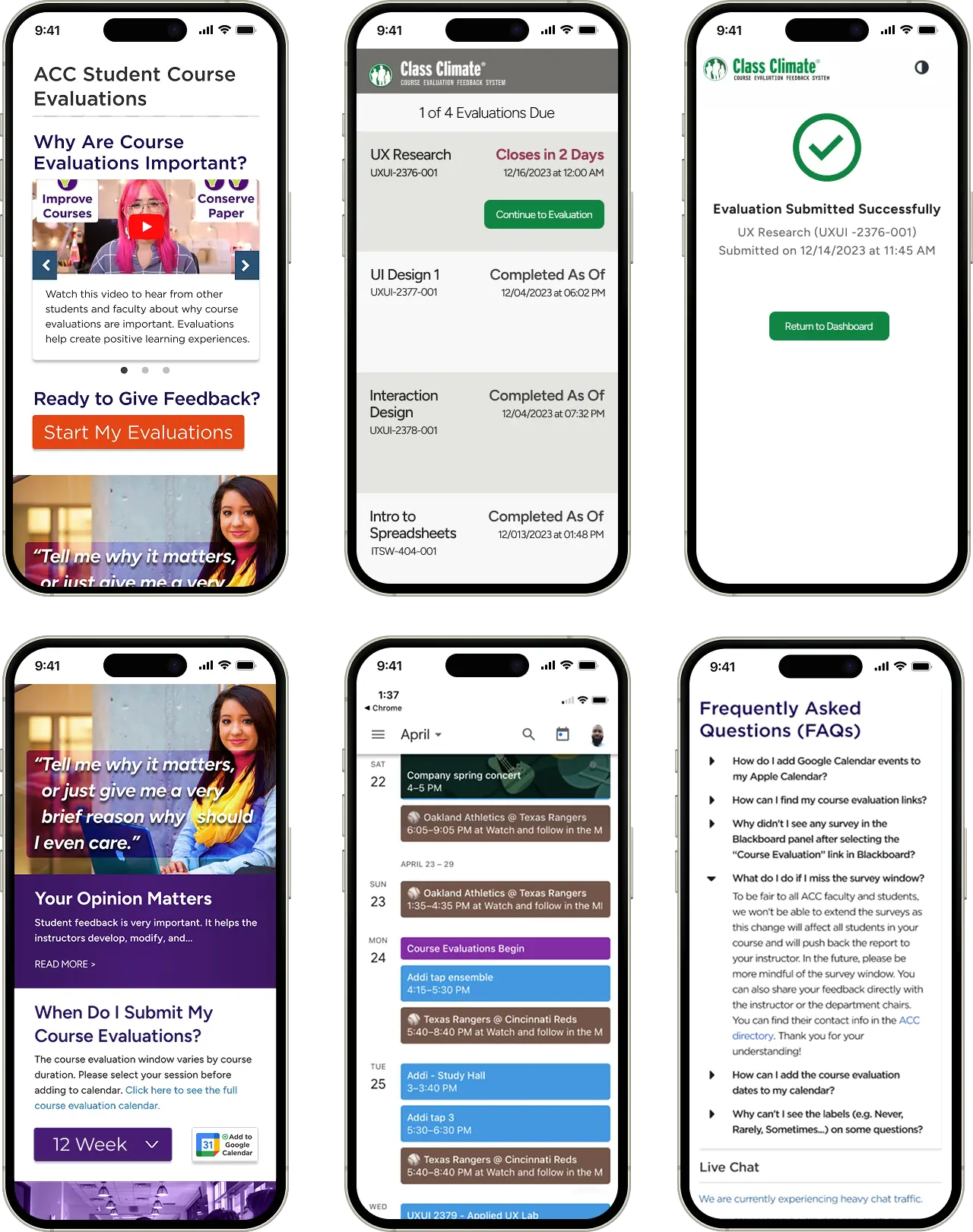

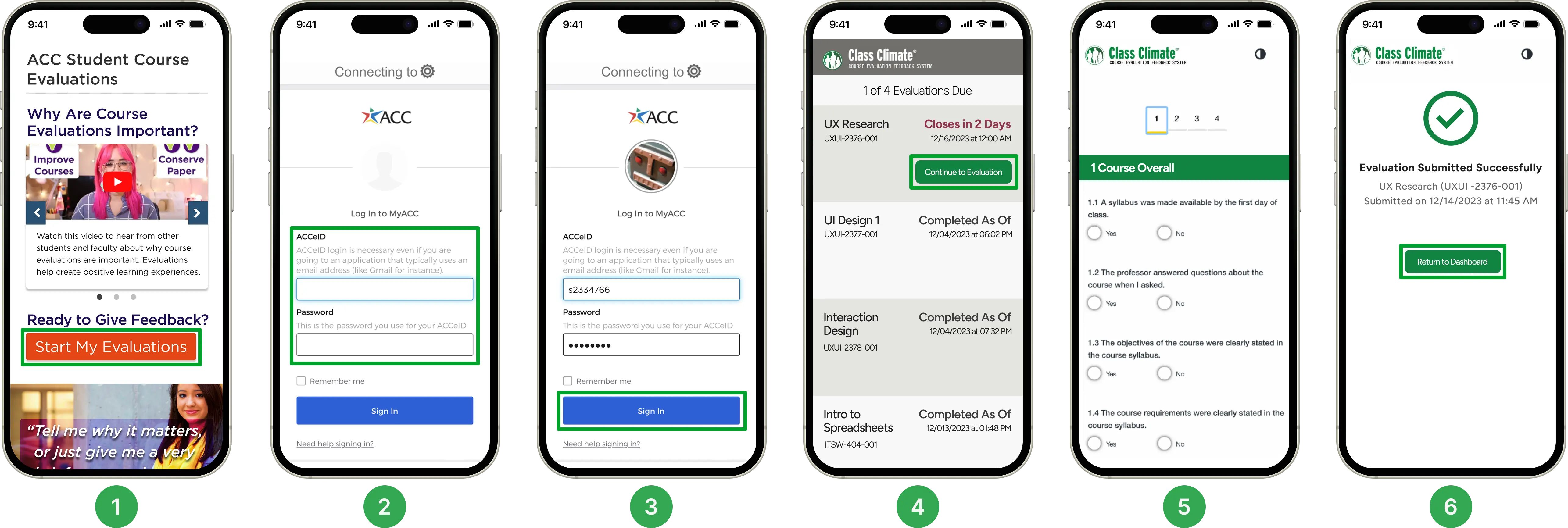

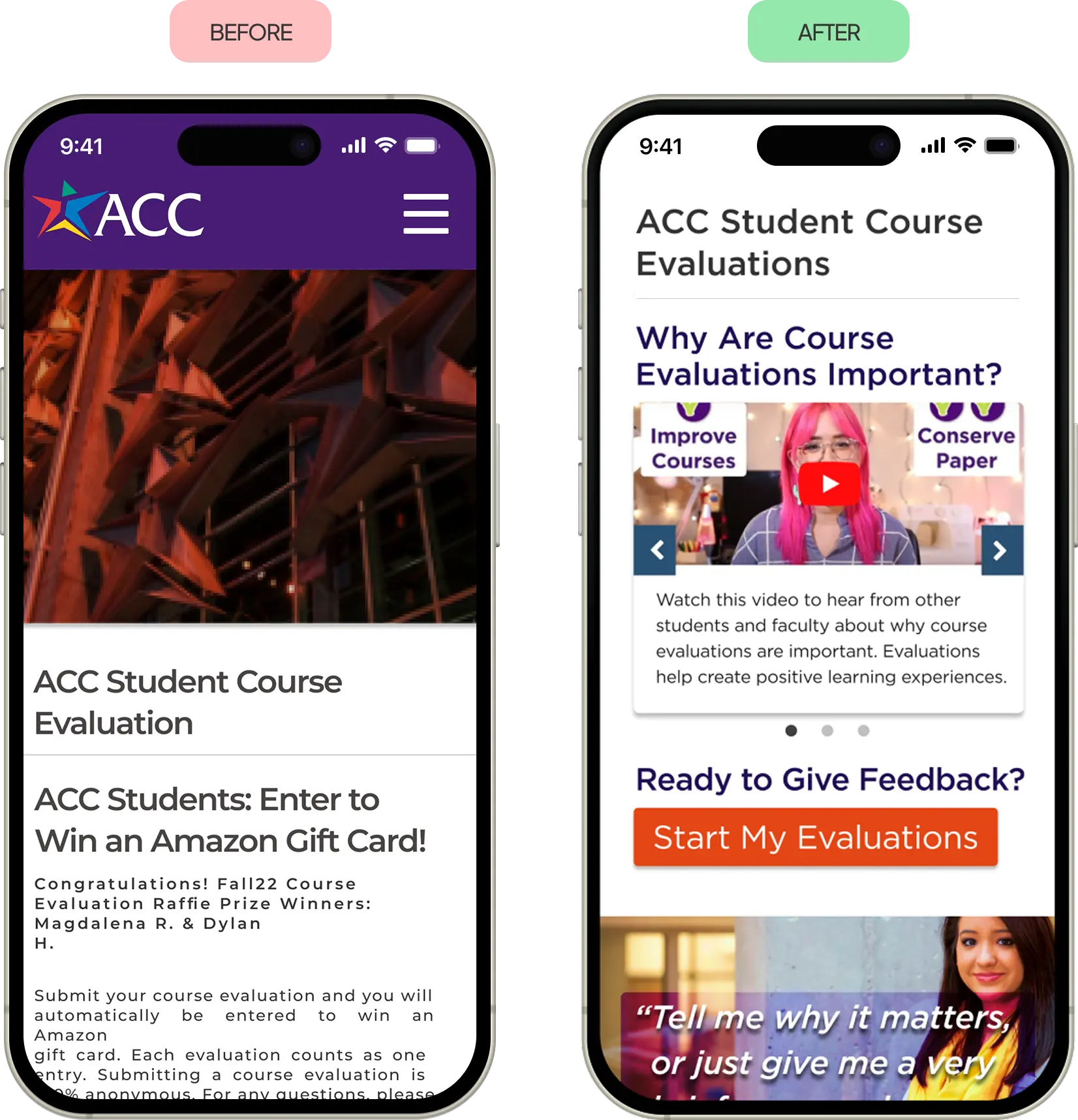

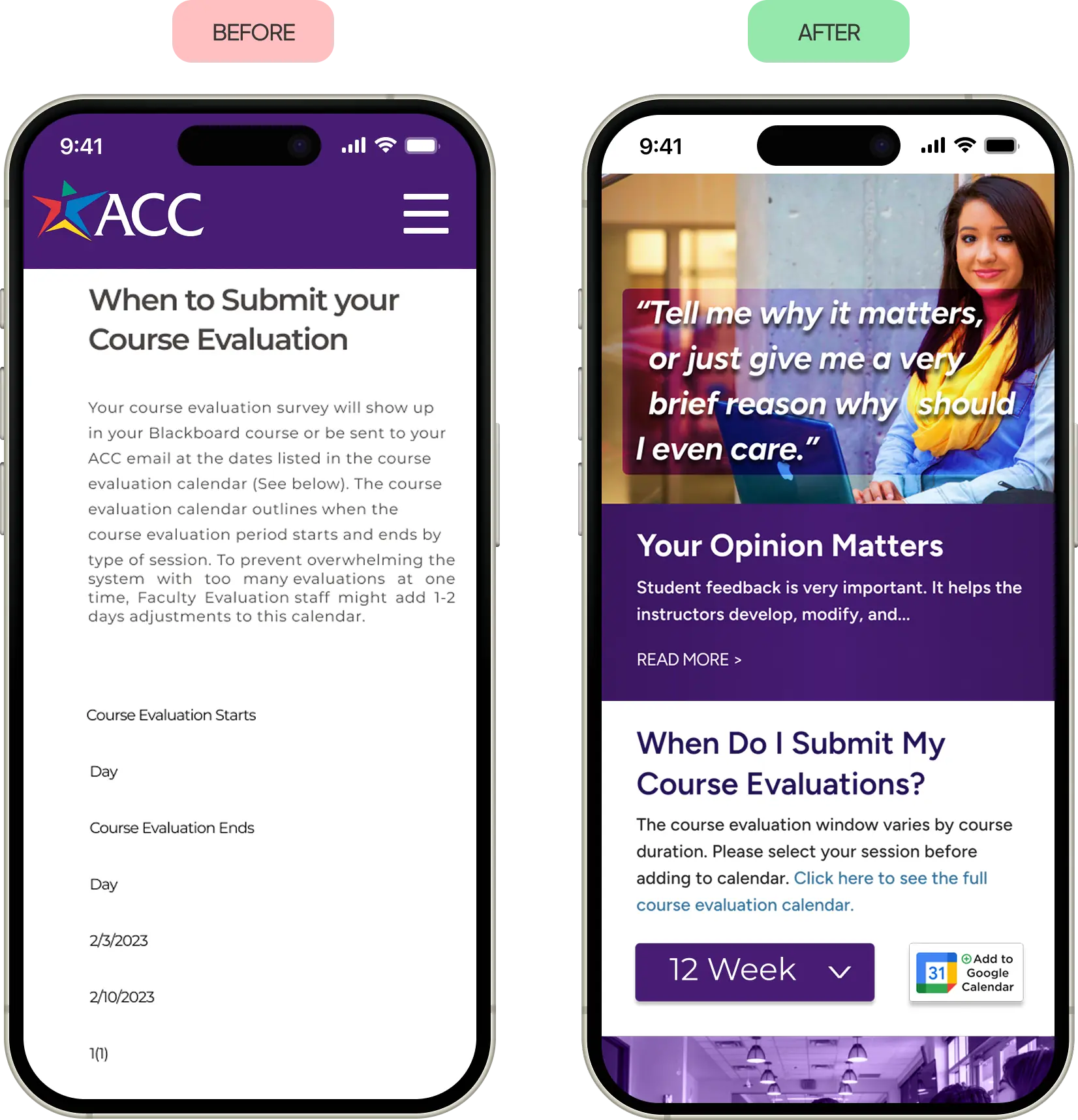

User Journey Map

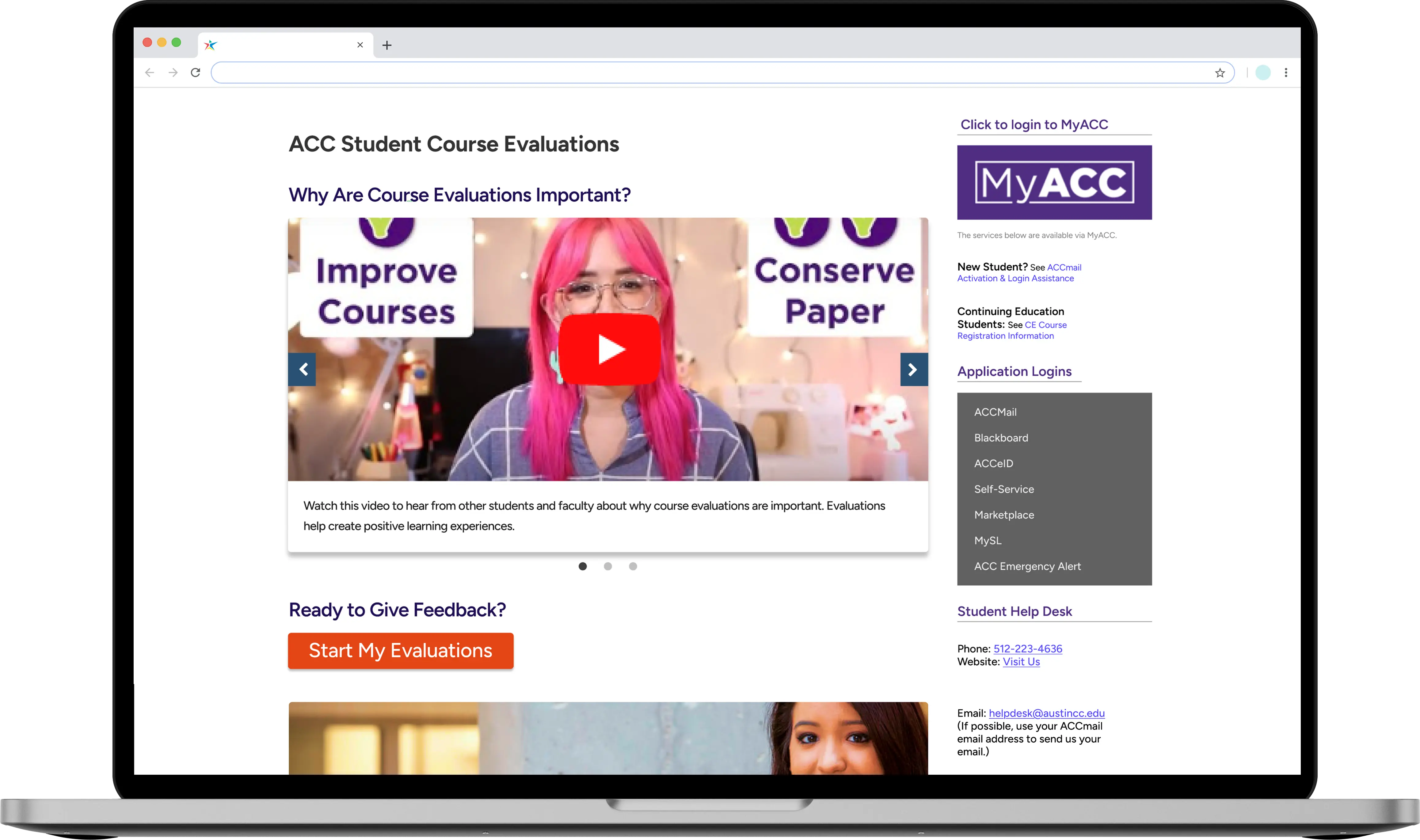

Right after defining our personas, we mapped the student's end-to-end experience: from the moment they learn an evaluation is due (via a professor's announcement or an email), through selecting an access method (Blackboard or an email link), to completing the form.